5 Best Free Open Source AI Video Upscalers/Enhancers for Windows/Mac/Linux

Not everyone has the budget for high-end AI video enhancers. If you've ever browsed Reddit, GitHub, or video editing forums, you've probably come across people asking the same question: "Are there any good open-source AI tools that can upscale or enhance videos?"

While the commercial options are often polished and powerful, a handful of open-source projects have also gained attention for offering AI-based features like upscaling, deblurring, and denoising — all without the price tag. Some are designed specifically for anime content, while others aim for general video enhancement. But how effective are they in practice? And what kinds of trade-offs come with using them?

This article walks you through some of the most popular free open-source AI video upscalers and enhancers available today, including what they do well, where they fall short, and what to expect during setup and use.

Open-source projects are fantastic, but getting them to run can be a headache. Struggling with Python dependencies, complex command lines, and slow processing speeds shouldn't be part of your creative workflow.

Aiarty Video Enhancer solves this by delivering professional-grade AI upscaling, denoising, deblurring, and more in a simple, user-friendly graphical interface. You can see some test examples in the YouTube video below.


Popular Free Open-Source AI Video Upscalers/Enhancers

There's no shortage of open-source tools claiming to upscale or enhance video using AI. Some are created by researchers and maintained by GitHub contributors, while others are polished by solo developers for practical everyday use.

Below are a few of the most popular free open-source video upscalers and enhancers that people often turn to. Each has its own strengths, limitations, and quirks you should be aware of before diving in.

Video2X

Supported platforms: Windows (fully supported), Linux (limited support)

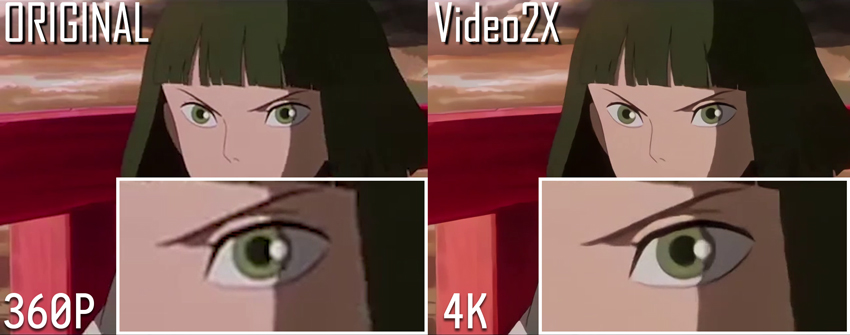

Video2X is a popular open-source tool that upscales videos by extracting individual frames, enlarging them with AI-powered image upscalers, and then reconstructing a high-resolution video using those processed frames.

The process involves several stages:

- Frame Extraction: Video2X first uses FFmpeg to split the video into individual frames and an audio file.

- Frame Upscaling: Each frame is then upscaled using one of the supported AI models like waifu2x, SRMD, or Anime4KCPP. The upscaling models are customizable, allowing users to choose the one that best suits the type of content (e.g., anime vs. real-life footage).

- Video Reconstruction: After the frames are processed, FFmpeg reassembles the upscaled frames and the original audio back into a final high-resolution video.

The process can be slow, especially when processing long videos or when GPU acceleration is not available.

For advanced users who are comfortable with scripting, it's possible to manually write a simple batch or bash script to automate this process, including splitting and merging the video, for even more customization.
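As a rough illustration of that kind of script, the sketch below builds the FFmpeg calls for cutting a long video into chunks and joining the processed chunks again. It relies on FFmpeg's segment muxer and concat demuxer; the file names, the 60-second chunk length, and the helper names are placeholders chosen for this example, not anything Video2X ships.

```python
from __future__ import annotations


def split_command(src: str, chunk_pattern: str, seconds: int) -> list[str]:
    """Cut src into chunks of roughly `seconds` each without re-encoding."""
    return [
        "ffmpeg", "-i", src,
        "-c", "copy", "-map", "0",
        "-f", "segment", "-segment_time", str(seconds),
        "-reset_timestamps", "1",
        chunk_pattern,
    ]


def concat_list(chunks: list[str]) -> str:
    """Build the text of the list file that the concat demuxer reads."""
    return "".join(f"file '{name}'\n" for name in chunks)


def merge_command(list_file: str, dst: str) -> list[str]:
    """Join the chunks named in list_file into one file, again without re-encoding."""
    return ["ffmpeg", "-f", "concat", "-safe", "0", "-i", list_file, "-c", "copy", dst]


# Placeholder names; nothing is executed here, the commands are only printed.
print(" ".join(split_command("input.mp4", "chunk_%03d.mp4", 60)))
print(concat_list(["chunk_000.mp4", "chunk_001.mp4"]), end="")
print(" ".join(merge_command("chunks.txt", "merged.mp4")))
```

Because the split uses stream copy, FFmpeg can only cut at keyframes, so chunk lengths are approximate. Each chunk would be upscaled on its own before the merge step, which makes it easier to resume after a failed run.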

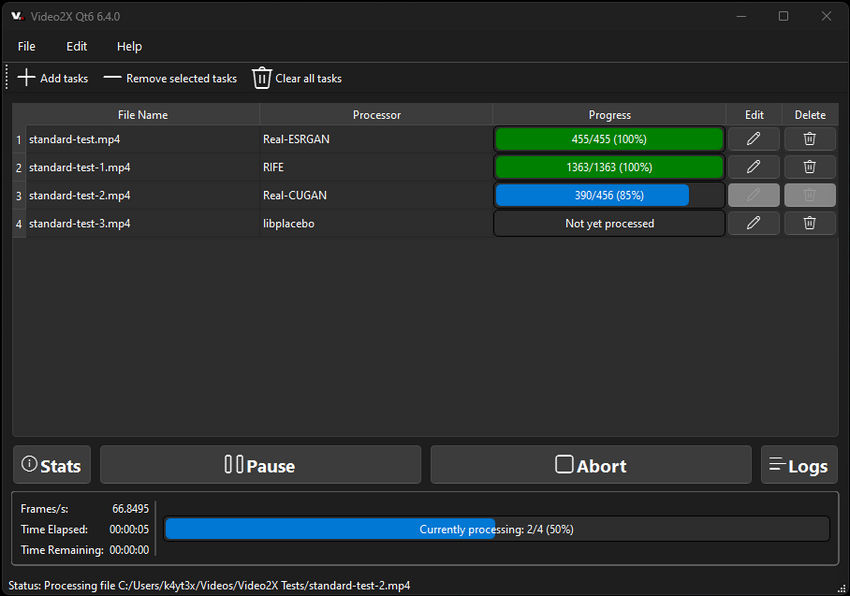

A simple graphical interface is available for Windows users, while Linux users will need to rely on command-line operation.

Pros:

- Supports multiple AI backends to suit different types of content

- Offers a Windows GUI that simplifies usage for non-technical users

- Batch processing supported for long videos or entire folders

- Free and open-source with no feature restrictions or watermarks

Cons:

- Processing is slow without GPU acceleration; NVIDIA GPU is strongly recommended

- Audio is not processed along with video and must be reattached separately

- Installation and model configuration can be confusing for beginners

- Linux support is limited and requires manual setup

- This tool doesn't run natively on macOS

Installation & How to Use:

1. Build the Video2X project on your computer

- Check how to build Video2X on Windows: https://docs.video2x.org/building/windows.html

- Check how to build the Qt6 GUI of Video2X on Windows: https://docs.video2x.org/building/windows-qt6.html

- Check how to build Video2X on Linux: https://docs.video2x.org/building/linux.html

2. Download and install Video2X

To run Video2X smoothly, your system needs to meet the following minimum hardware requirements.

CPU

- The precompiled binaries require CPUs with AVX2 support.

- Intel: Haswell (Q2 2013) or newer

- AMD: Excavator (Q2 2015) or newer
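On Linux, one quick way to check the AVX2 requirement is to look for the avx2 flag in /proc/cpuinfo. The sketch below is only an illustration: has_avx2 is a hypothetical helper, and on Windows or macOS you would need a different method, such as the CPU vendor's specification page.

```python
from pathlib import Path


def has_avx2(cpuinfo_text: str) -> bool:
    """Return True if a 'flags' line in /proc/cpuinfo-style text lists avx2."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags" and "avx2" in value.split():
            return True
    return False


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print("AVX2 supported:", has_avx2(cpuinfo.read_text()))
    else:
        print("No /proc/cpuinfo on this system; check the CPU model manually.")
```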

GPU

- The GPU must support Vulkan.

- NVIDIA: Kepler (GTX 600 series, Q2 2012) or newer

- AMD: GCN 1.0 (Radeon HD 7000 series, Q1 2012) or newer

- Intel: HD Graphics 4000 (Q2 2012) or newer

Tip: If your computer doesn't have a powerful GPU, you can still use Video2X for free on Google Colab. Google provides access to high-performance GPUs like the NVIDIA T4, L4, or A100, which you can use for up to 12 hours per session.

To install on Windows (Command-line version)

You can grab the latest precompiled release from the project's GitHub releases page and extract it into your local user directory, for example %LOCALAPPDATA%\Programs\video2x.

Once extracted, add %LOCALAPPDATA%\Programs\video2x to your PATH environment variable so the video2x command can be run from any terminal.
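For readers who prefer to script the extraction step, here is a minimal sketch using only the Python standard library. The archive name is hypothetical, so substitute whatever file you actually downloaded; the helper names are made up for this example. It does not download anything and does not modify PATH.

```python
from __future__ import annotations

import os
import zipfile
from pathlib import Path


def video2x_install_dir(local_appdata: str) -> Path:
    """Return <LOCALAPPDATA>/Programs/video2x, the folder named in the article."""
    return Path(local_appdata) / "Programs" / "video2x"


def extract_release(zip_path: str | Path, dest: Path) -> list[str]:
    """Unpack the release archive into dest and return the names it contained."""
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest)
        return archive.namelist()


if __name__ == "__main__":
    # Hypothetical archive name; use the file you downloaded from the releases page.
    archive = Path("video2x-windows-amd64.zip")
    appdata = os.environ.get("LOCALAPPDATA")
    if appdata and archive.exists():
        target = video2x_install_dir(appdata)
        print(f"Extracted {len(extract_release(archive, target))} entries to {target}")
```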

To install Windows Qt6 GUI

If you prefer a graphical interface, the Qt6 version of Video2X provides a cleaner experience. Download the installer file—video2x-qt6-windows-amd64-installer.exe—from the releases page, then double-click to launch it.

The setup wizard will guide you through the installation process. You can select the install path and decide whether to create a desktop shortcut.

To install on Linux

Linux users should follow the instructions in the official Linux installation guide.

3. Run Video2X

You can run Video2X either via command line or through its GUI (on Windows).

To use the command line, refer to the CLI documentation. You can define the input and output paths, the AI processor and model (such as realesrgan), output options, and more.

Example command:
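A minimal sketch of such a command, assembled in Python so that each argument is visible. The flag names (-i, -o, -p, -s, --realesrgan-model) and the model name reflect recent Video2X 6.x releases and should be treated as an assumption; run video2x --help to confirm them for your version. The file names are placeholders.

```python
import shlex


def video2x_command(src, dst, scale=4, model="realesr-animevideov3"):
    """Argument list for a Real-ESRGAN upscale with the video2x CLI (flags assumed)."""
    return [
        "video2x",
        "-i", src,                      # input video
        "-o", dst,                      # output video
        "-p", "realesrgan",             # processor to use
        "-s", str(scale),               # scaling factor
        "--realesrgan-model", model,    # model name, an assumption
    ]


# Print the command instead of running it.
print(shlex.join(video2x_command("input.mp4", "output.mp4")))
```

Passing the list to subprocess.run(cmd, check=True) would execute it, provided video2x is on your PATH.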

To use the GUI, just launch Video2X Qt6. Load your video, configure settings such as GPU backend, processing mode, and filters, then click the Run button to start upscaling.


Real-ESRGAN

Supported platforms: Windows, Linux, macOS

Real-ESRGAN is a widely used free open-source AI image and video upscaler developed by the team behind ESRGAN. Built on the PyTorch framework, it offers high-quality image and video upscaling using pre-trained generative adversarial networks (GANs). Although it was originally designed for single-image super-resolution, Real-ESRGAN can also be applied to videos by processing them frame by frame, making it a strong choice for restoring low-resolution or compressed video content.

It performs especially well on real-world scenes — such as landscapes, faces, or low-quality smartphone footage — and is known for its ability to restore fine details while reducing noise and compression artifacts.

Pros:

- State-of-the-art image enhancement quality, especially for real-world photos and videos

- Multiple pre-trained models available (general, anime, face restoration, etc.)

- Actively maintained and backed by a strong research team

- Can be scripted or integrated into custom video workflows

Cons:

- No built-in GUI; requires command-line use or manual scripting

- Needs frame extraction and recombination for video use (not automated)

- Requires Python environment and basic familiarity with PyTorch

- Processing can be slow on CPU; GPU is highly recommended for practical use

Installation & How to Use:

1. Install Python and dependencies

- Make sure Python 3.7 or later is installed

- Clone the repo and install the required packages:

```bash
git clone https://github.com/xinntao/Real-ESRGAN.git
cd Real-ESRGAN

# We use BasicSR for both training and inference
pip install basicsr

# facexlib and gfpgan are for face enhancement
pip install facexlib
pip install gfpgan

pip install -r requirements.txt
python setup.py develop
```

2. Download pre-trained models

The project supports various models:

- RealESRGAN_x4plus (general use)

- RealESRGAN_x4plus_anime_6B (anime images)

- realesr-general-x4v3 (for compressed images)

Download the desired .pth model files from the GitHub release or provided links.

3. Prepare your video (frame extraction)

Use FFmpeg to extract frames from your input video:
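As a hedged sketch, the extraction call can be assembled like this. The input name, the frames folder, and the frame%08d.png naming pattern are placeholders chosen for this example. FFmpeg does not create the output folder, so it has to exist before the command runs.

```python
from pathlib import Path


def extract_frames_command(video, frames_dir):
    """ffmpeg call that writes every frame of `video` as a numbered PNG."""
    pattern = str(Path(frames_dir) / "frame%08d.png")
    return ["ffmpeg", "-i", video, pattern]


cmd = extract_frames_command("input.mp4", "frames")
print(" ".join(cmd))
# To run it for real: Path("frames").mkdir(exist_ok=True), then
# subprocess.run(cmd, check=True) with ffmpeg available on PATH.
```

PNG frames are lossless but large, so check free disk space before extracting a long video.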

4. Run Real-ESRGAN on extracted frames

Example command (for 4x upscaling):
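A sketch of that call, assuming it is run from inside the cloned Real-ESRGAN folder, where the inference_realesrgan.py script lives. The -n, -i, -o, and --outscale options come from the project's README; the folder names are placeholders.

```python
import sys


def realesrgan_command(frames_dir, out_dir, model="RealESRGAN_x4plus", outscale=4):
    """Argument list for upscaling every image in frames_dir with Real-ESRGAN."""
    return [
        sys.executable, "inference_realesrgan.py",
        "-n", model,                 # model name, matching the downloaded weights
        "-i", frames_dir,            # folder of extracted frames
        "-o", out_dir,               # folder for upscaled frames
        "--outscale", str(outscale), # final scaling factor
    ]


print(" ".join(realesrgan_command("frames", "frames_upscaled")))
```

By default the script appends a suffix to each output file name (out, giving names such as frame00000001_out.png), which matters when you point FFmpeg at the results in the next step.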

5. Recombine the frames into a video

Use FFmpeg again:
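A minimal sketch of the reassembly call. It assumes the upscaled frames are named frame%08d_out.png and uses H.264 output as one common choice; the frame rate must match the source video (ffprobe can report it), and all file names here are placeholders.

```python
from pathlib import Path


def rebuild_command(frames_dir, fps, dst, pattern="frame%08d_out.png"):
    """ffmpeg call that encodes numbered frames into a video without audio."""
    return [
        "ffmpeg",
        "-framerate", str(fps),              # input frame rate, same as the source
        "-i", str(Path(frames_dir) / pattern),
        "-c:v", "libx264",                   # H.264 encoder, an example choice
        "-pix_fmt", "yuv420p",               # widely compatible pixel format
        dst,
    ]


print(" ".join(rebuild_command("frames_upscaled", 24, "upscaled_noaudio.mp4")))
```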

6. (Optional) Add audio back from the original video
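One way to do this, sketched below, is to take the video stream from the upscaled file and the first audio stream from the original, copying both without re-encoding. File names are placeholders, and the sketch assumes the original has at least one audio track.

```python
def mux_audio_command(upscaled, original, dst):
    """ffmpeg call that pairs the upscaled video with the original audio."""
    return [
        "ffmpeg",
        "-i", upscaled,      # input 0: upscaled video, no audio
        "-i", original,      # input 1: original file with audio
        "-map", "0:v:0",     # first video stream of input 0
        "-map", "1:a:0",     # first audio stream of input 1
        "-c", "copy",        # copy streams as they are
        dst,
    ]


print(" ".join(mux_audio_command("upscaled_noaudio.mp4", "input.mp4", "output.mp4")))
```

Since the frame rate and frame count are unchanged by upscaling, the copied audio should stay in sync as long as the rebuilt video used the source frame rate.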

Real-ESRGAN is best suited for users comfortable with Python and command-line tools, or those who want to integrate high-quality upscaling into automated pipelines. While it's more technical to set up than GUI-based options, the visual results often speak for themselves — especially when restoring detail in blurry or low-res footage.

Tip: Just like Video2X, Real-ESRGAN can also run on Google Colab. Check out this video to see how to use Colab to upscale videos to HD or even 4K without needing a powerful local GPU.

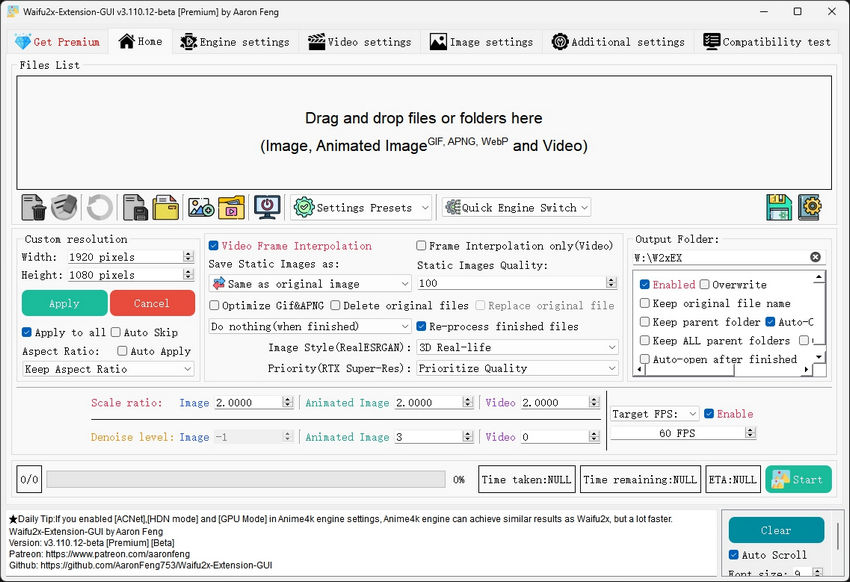

Waifu2x Extension GUI

Supported platform: Windows

Waifu2x Extension GUI is a user-friendly, Windows-only desktop application that wraps several AI upscalers — including waifu2x, Real-ESRGAN, and Anime4K — into a single graphical interface. Originally designed for anime-style images and videos, it has since expanded to support real-world content with additional model options. It’s widely appreciated for being easy to install and use, with no command-line operations required.

Unlike many open-source tools, Waifu2x Extension GUI is built for convenience: it handles frame extraction, upscaling, video reconstruction, and even audio syncing — all within the same interface. This makes it one of the most beginner-friendly options available for AI-based video enhancement on Windows.

Pros:

- All-in-one GUI that supports image, GIF, and video upscaling

- Includes multiple AI backends (waifu2x-ncnn-vulkan, Real-ESRGAN, Anime4K, SRMD)

- Built-in support for video/audio muxing, so no FFmpeg command line is needed

- Offers batch processing and advanced settings for experienced users

- No Python or external dependencies required

Cons:

- Windows only; no support for macOS or Linux

- Processing can be time-consuming on low-end GPUs

- Model options and update frequency depend on developer maintenance

- Slightly heavier installer (~1.5 GB due to bundled models and dependencies)

Installation & How to Use:

1. Download the installer

Go to the project's GitHub or Gitee release page: https://github.com/AaronFeng753/Waifu2x-Extension-GUI. Choose the latest .exe version (e.g., Waifu2x-Extension-GUI-v...-Installer.exe).

2. Install and launch the program

Run the installer. Once completed, open the application from the desktop shortcut or Start Menu.

3. Load your video

Drag and drop your video file into the program, or click "Add Files" and select your input.

4. Choose upscaling settings

- Select AI model (e.g., Real-ESRGAN, waifu2x-ncnn-vulkan, or Anime4KCPP)

- Set output resolution multiplier (e.g., 2× or 4×)

- Optional: apply denoising, frame interpolation, or artifact reduction

5. Start processing

Click the "Start" button. The app will handle everything automatically: extract frames, upscale, rebuild the video, and sync the audio.

6. Access the output

The upscaled video will be saved in the specified output folder, ready to use or upload.

Waifu2x Extension GUI is ideal for users who want solid AI upscaling results without touching code or setting up complex environments. While it's less customizable than script-based tools, it offers a polished out-of-the-box experience that's hard to beat — especially for anime and low-resolution web videos.

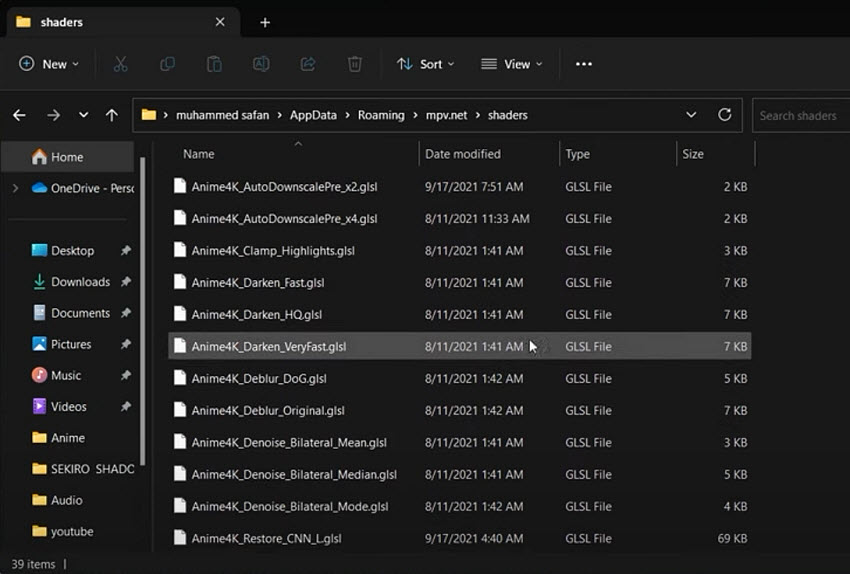

Anime4K

Supported platforms: Windows, macOS, Linux (via video players or custom scripts)

Anime4K is a lightweight, open-source video upscaling algorithm specifically designed for anime and line-art content. Unlike tools that rely on large deep learning or GAN models, Anime4K uses efficient GLSL shaders that run directly on your GPU. This makes it fast enough for real-time use and suitable even for low-end hardware.

Rather than extracting and reassembling video frames, Anime4K is typically used within video players like MPV or VLC via shader scripts. It can also be applied offline using FFmpeg filters or command-line tools, but its biggest strength lies in real-time playback enhancement — making old anime or low-res web videos look cleaner and sharper instantly.

Pros:

- Real-time enhancement with almost no delay

- Extremely lightweight; works on integrated GPUs

- Great for anime, cartoons, and line-art-style content

- Works inside MPV, VLC, or with custom scripts, so no separate frame extraction is needed

- Cross-platform support

Cons:

- Not suitable for photographic or real-world video content

- No graphical interface or packaged installer

- Requires manual configuration to integrate with video players

- Output quality isn't as sharp or detailed as deep learning-based upscalers

Installation & How to Use:

Option 1: Real-time playback with MPV

- Download MPV player from: https://mpv.io/

- Clone or download the Anime4K repository: https://github.com/bloc97/Anime4K

- Place the shader files (e.g., Anime4K_Clamp_Hybrid.glsl) in MPV's shaders folder.

- Edit mpv.conf to activate the shader: glsl-shaders="~~/shaders/Anime4K_Clamp_Hybrid.glsl"

- Open your video with MPV, and the enhancement will apply in real time.
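When more than one shader is chained, mpv expects the paths in a single glsl-shaders entry, separated by ; on Windows and : on Linux and macOS. The helper below is a hypothetical convenience for generating that line; the first call uses the shader name from the step above, while the chained names are placeholders.

```python
def glsl_shaders_line(shader_files, windows):
    """Build an mpv.conf glsl-shaders line for files stored in ~~/shaders."""
    separator = ";" if windows else ":"
    paths = separator.join(f"~~/shaders/{name}" for name in shader_files)
    return f'glsl-shaders="{paths}"'


print(glsl_shaders_line(["Anime4K_Clamp_Hybrid.glsl"], windows=False))
# Placeholder names to show how a chain is joined on Windows.
print(glsl_shaders_line(["first.glsl", "second.glsl"], windows=True))
```

Paste the printed line into mpv.conf. Shaders are applied in the order listed.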


Option 2: Offline processing with FFmpeg (Advanced users)

Some forks (like Anime4KCPP) provide ways to run Anime4K filters on video files directly, but this requires compiling the tool or using specific FFmpeg builds with shader support — not beginner-friendly.

Anime4K is best suited for anime fans or anyone who wants instant visual improvement for low-res animated content. It doesn't offer the deep restoration capabilities of AI models like Real-ESRGAN or Video2X, but its speed and simplicity make it an excellent choice for playback or lightweight enhancement.

As you can see, open-source video enhancers come in many forms — from user-friendly GUIs like Waifu2x Extension GUI to lightweight shader-based solutions like Anime4K.

While these tools are powerful and cost nothing to use, they also come with certain trade-offs that might be frustrating for beginners or even experienced users.

After one more tool, we'll look at some of the most common challenges people face when working with these open-source solutions.

REAL Video Enhancer

Supported platforms: Windows, Linux, macOS

REAL Video Enhancer is an all-in-one desktop application designed for AI-powered video enhancement, including frame interpolation and upscaling. It was created as a modern, cross-platform alternative to older software like Flowframes, offering a user-friendly experience without the need for command-line knowledge.

The application leverages cutting-edge AI models, such as RIFE for video interpolation and Real-ESRGAN for upscaling. It uses highly optimized inference engines like TensorRT and NCNN to maximize performance, especially on modern GPUs. Unlike many GUI tools, REAL Video Enhancer supports all major desktop operating systems, making it a versatile choice for a wide range of users.

Pros:

- Cross-platform support, available on Windows, Linux, and macOS.

- Highly optimized, using TensorRT and NCNN backends for superior performance on supported hardware.

- Comprehensive features, integrating frame interpolation (RIFE), upscaling (Real-ESRGAN), and video decompression into a single interface.

- User-friendly GUI, providing a simple and intuitive interface for a straightforward workflow.

- Includes advanced features like Discord RPC integration, scene change detection, and a real-time preview of the rendered frame.

Cons:

- Resource-intensive, requiring a powerful GPU with at least 8 GB of VRAM and 16 GB of RAM for optimal performance.

- Larger download size, with the TensorRT version potentially reaching up to 16 GB due to bundled models and dependencies.

- Specific hardware requirements, as optimal performance is tied to specific GPUs and operating systems that fully support the TensorRT backend.

Installation & How to Use:

1. Download the application

Go to the official project page on GitHub: https://github.com/TNTwise/REAL-Video-Enhancer/releases/tag/RVE-2.3.6.

Download the appropriate version for your operating system (Windows .exe, Linux .flatpak, or macOS .dmg).

2. Install and launch

Run the downloaded installer. Follow the prompts to complete the installation. Once finished, launch the application from your desktop or applications folder.

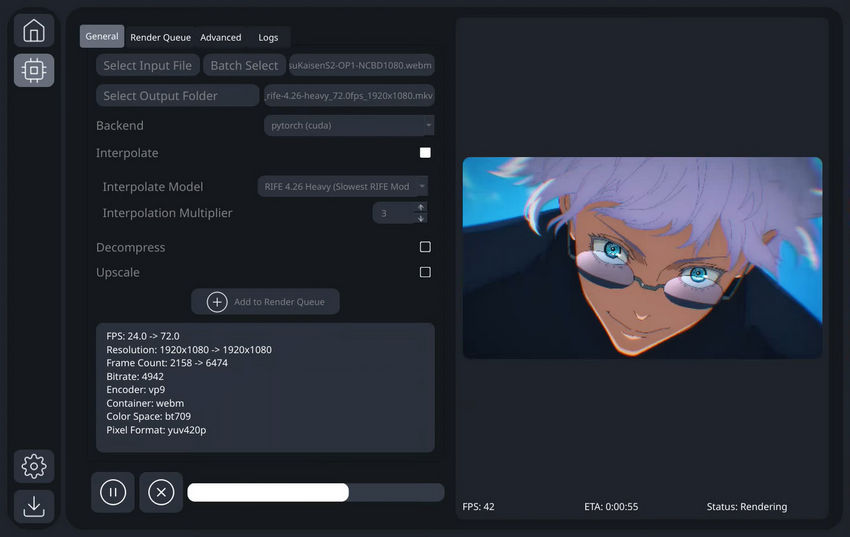

3. Load Your Video File

In the "General" tab, click "Select Input File" or "Batch Select" to import the video(s) you want to process.

4. Configure Processing Settings

- Backend: Choose a suitable backend, such as pytorch (cuda), to utilize your GPU's power.

- Interpolate: Check this box to enable frame interpolation.

- Interpolate Model: Select a model for frame interpolation, like RIFE 4.26 Heavy.

- Interpolation Multiplier: Set the interpolation factor, for example, 3, which will increase the video's frame rate by three times.

- Decompress: Check this box to apply a model that reduces compression artifacts in the video.

- Upscale: Check this box to enable upscaling and select the corresponding model.
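To make the interpolation multiplier concrete, here is a small arithmetic sketch. The function names are made up for this example, and the numbers are illustrative rather than taken from the application.

```python
def interpolated_fps(source_fps, multiplier):
    """Output frame rate when the duration stays the same."""
    return source_fps * multiplier


def new_frames_between(multiplier):
    """Generated frames inserted between each pair of original frames."""
    return multiplier - 1


print(interpolated_fps(24, 3))   # a 24 fps clip with multiplier 3
print(new_frames_between(3))     # frames the model has to synthesize per gap
```

With a multiplier of 3, a 24 fps clip plays back at 72 fps, and two of every three output frames are synthesized by the model, which is why higher multipliers take longer to render.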

5. Add to Render Queue

Once you have configured all your settings, click "Add to Render Queue". You can then manage your pending tasks in the "Render Queue" tab.

6. Start Processing

Click the play button (►) at the bottom to begin the processing task.

REAL Video Enhancer's intuitive GUI makes complex video enhancement operations simple, allowing even beginners to get started with ease.

Common Difficulties When Using Open Source AI Video Enhancers

While open-source AI video upscalers offer impressive capabilities, they often come with a steep learning curve. Many users download these tools expecting a plug-and-play experience, only to discover that getting them to work involves technical steps, dependency management, or hours of trial and error. Here are some of the most common challenges people encounter.

1. Complex Installation and Setup

Many open-source AI video enhancers rely on Python, PyTorch, or other frameworks that require manual installation. You may need to clone GitHub repositories, install dependencies, configure environment variables, or download separate AI models — all before you can even process a single video. If you’re not already familiar with these tools, the process can feel overwhelming.

2. Lack of a Unified Interface

Unlike commercial tools, which typically offer polished interfaces and streamlined workflows, most open-source projects focus on the backend AI functionality. You often need to extract video frames using FFmpeg, upscale them separately, and then manually reassemble the video. GUI options exist, but they're often limited to Windows or may be outdated.

3. Audio Handling is Often Ignored

Many tools focus solely on the visual part of the video and leave audio out of the equation. As a result, users must manually extract and reattach audio tracks using FFmpeg or other tools. This adds yet another step to an already complex workflow and increases the chance of errors like desynchronization.

4. Limited Support and Documentation

Since these tools are often passion projects or research demos, they usually lack formal support channels. Documentation can be inconsistent, outdated, or too technical for beginners. If you get stuck, your only recourse may be searching GitHub issues, Reddit threads, or online forums — which doesn't always yield clear answers.

A Simpler, More Efficient Alternative to Open-Source Video Upscalers/Enhancers

If you've ever struggled to get an open-source AI upscaler running, you're not alone. Between installing Python environments, managing model files, and stitching video and audio back together, what should be a simple enhancement task often turns into a weekend project.

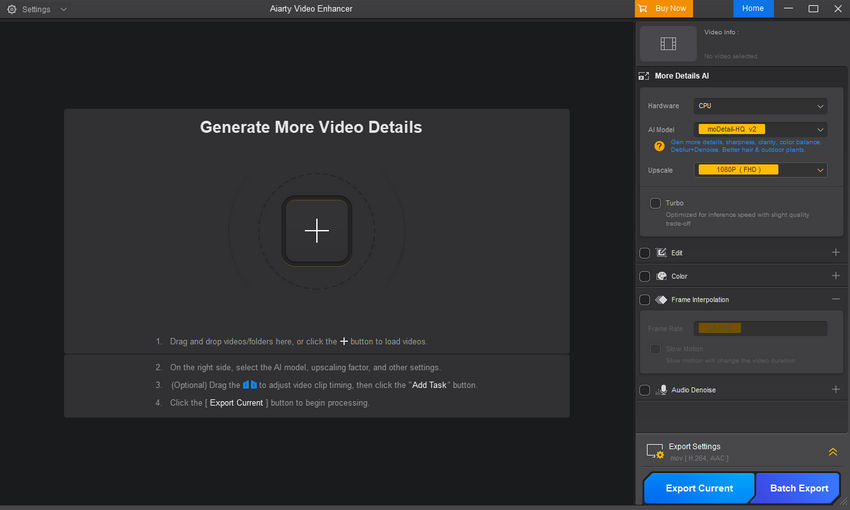

To simplify the entire process, Aiarty Video Enhancer offers a clean, all-in-one solution for video upscaling and restoration — no coding, no command-line tools, and no technical guesswork required.

With Aiarty, you can upscale low-resolution videos to 1080p, 2K, or even 4K in just a few clicks. It also goes beyond basic resolution improvement — removing compression artifacts, reducing noise, deblurring faces, and restoring fine details in everything from old DV tapes to mobile-shot footage. For choppy videos, frame interpolation helps create a smoother look.

Feature Comparison: Aiarty Video Enhancer vs. Open-Source Video Enhancers

While Anime4K is a well-known open-source project, it focuses on real-time playback enhancement rather than pre-processing and exporting videos — making it fundamentally different from the other tools discussed. For that reason, we've excluded it from the comparison table below, which focuses on tools designed for AI-based video upscaling and enhancement through preprocessing.

Here's how Aiarty Video Enhancer stacks up against the most commonly used open-source options:

How to Use Aiarty Video Enhancer to Upscale/Enhance Your Videos

Getting started with Aiarty Video Enhancer is straightforward and requires no prior video editing knowledge. Just follow these simple steps to enhance your videos smoothly:

Step 1: Download and install Aiarty Video Enhancer

Download and install Aiarty Video Enhancer on your PC or Mac. The installation process is straightforward and requires no additional dependencies or environment setup.

Step 2: Import your video

Open the program and drag your video file into the main workspace.

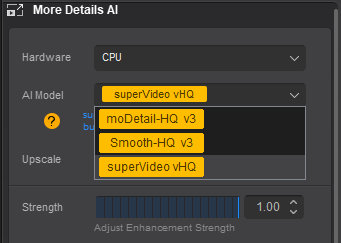

Step 3: Select an AI model that matches your footage

Aiarty provides specialized models for different scenarios. From the AI Model dropdown:

- More Detail-HQ v3: Choose this for videos with plenty of fine detail. It enhances and sharpens textures while maintaining balanced natural colors.

- Smooth-HQ v3: It smooths out imperfections and creates a polished look without over-processing.

- SuperVideo vHQ: Use this for very dark or low-light videos to remove heavy noise and recover hidden details.

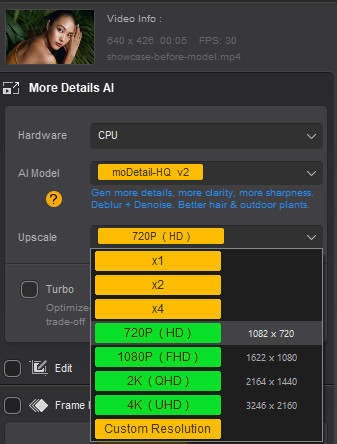

Step 4: Choose your desired upscaling option

Under the Upscale menu, select the resolution that fits your footage and goals.

Tips:

- For many videos, choosing a higher option such as ×4 or 4K delivers an immediate improvement in clarity and detail.

- In some situations, however, upscaling in stages (for example, ×2 followed by another ×2) can produce cleaner and more stable results than jumping straight to ×4 or 4K, especially when working with heavily compressed or older footage.

- If your source video is already high resolution and you mainly want to reduce noise or fix minor blur, selecting ×1 allows you to enhance image quality without increasing the output resolution.
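The resolution arithmetic behind these options is simple to check. The sketch below uses a hypothetical helper, upscaled_size, to show that two ×2 passes reach the same pixel dimensions as a single ×4 pass, so any difference in the result comes from how the model behaves at each step, not from the final size.

```python
def upscaled_size(width, height, factors):
    """Apply each scale factor in turn and return the final (width, height)."""
    for factor in factors:
        width *= factor
        height *= factor
    return width, height


print(upscaled_size(1920, 1080, [2]))     # 1080p source, single x2 pass
print(upscaled_size(960, 540, [2, 2]))    # two x2 passes
print(upscaled_size(960, 540, [4]))       # one x4 pass, same final size
```

A 1920x1080 source at ×2 comes out at 3840x2160, and a 960x540 source reaches the same size with either ×2 twice or ×4 once.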

Step 5: Preview the result and fine-tune if needed

Click the Preview button to see a short sample of how the enhanced video will look before exporting.

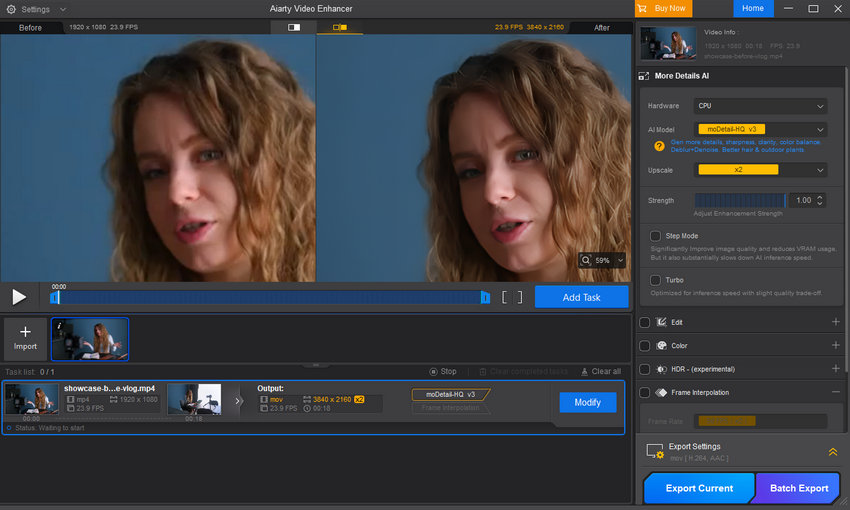

In my test, I chose 2x upscaling, going from 1920x1080 to 3840x2160. As you can see in the screenshot below, the video preview looks excellent — all the noise from the original has been completely removed.

If the preview doesn’t meet your expectations, you can refine the result using the following adjustments:

- Switch to a different AI model to better match your footage type.

- Try another upscaling option, such as step-by-step scaling instead of a single large jump.

- Reduce the enhancement strength if the image appears over-sharpened or unnatural.

- Enable Step Mode to further improve visual quality while significantly reducing VRAM usage (note that this may slow down AI processing).

- Adjust colors if needed by expanding the Color panel and enabling Restore Color or fine-tuning temperature, tint, exposure, contrast, and other parameters.

Step 6: Export the enhanced video

If the preview meets your expectations, add the task to the queue and select either "Export Current" for a single video or "Batch Export" to process multiple videos at once.

Here are two more examples that highlight Aiarty Video Enhancer's capabilities in upscaling and enhancing videos.

Example 1: The source is an old recording with heavy grain and color noise. After enhancement, the footage is noticeably clearer.

Example 2: A 1190x724 anime video was successfully upscaled to 2380x1448, with sharper lines and reduced artifacts.

Conclusion

Open-source AI video upscalers and enhancers offer exciting possibilities for improving video quality without spending a dime. However, as we've seen, they often come with technical hurdles, complex setups, and limited user-friendly features that can slow down or frustrate many users.

For those seeking a more streamlined, hassle-free way to upscale and enhance videos, tools like Aiarty Video Enhancer provide a powerful yet accessible solution. With its easy-to-use interface, comprehensive features, and reliable performance, it bridges the gap between advanced AI technology and practical everyday use.

Whether you're restoring old family videos, cleaning up smartphone footage, or simply want sharper, clearer content for social media, choosing the right tool makes all the difference. Hopefully, this guide helps you make an informed choice and get the most out of AI-powered video enhancement.